DeepConf Revolution: More Precision and Fewer Resources for LLMs

Imagine you're in a particularly difficult math exam. You're facing a problem that makes you break out in a cold sweat, the kind from the Math Olympiads that makes even the brightest cry. The classic strategy? Trial and error, writing dozens of different attempts hoping one is right. But there's a smarter approach: understanding when you're going in the wrong direction and stopping before you waste time and energy.

This is exactly what a young team of researchers from Meta AI, led by Jiawei Zhao, a researcher at Meta AI FAIR with a background from the prestigious Caltech, along with colleagues Yichao Fu and Xuewei Wang, has done.

Their work, published on arXiv just a few weeks ago under the title "Deep Think with Confidence", represents one of those discoveries that seem simple in hindsight but actually hide considerable technical complexity. Like Archimedes' seemingly trivial gesture in the bathtub, this research also starts from a basic observation: if an artificial intelligence is reasoning poorly, why not teach it to recognize it on its own?

The Problem: When "Thinking More" Isn't Enough

To understand the revolutionary scope of this approach, let's take a step back. Large language models, which we all call LLMs, have a particular characteristic when they face complex problems: the more attempts they make, the better their answers become. It's as if they were particularly stubborn students who keep retrying an exercise until they get it right.

This approach, technically called "test-time scaling," works with a seemingly simple logic: if you have the model generate fifty different solutions to a problem and then choose the most frequent one among them, the chances of getting the right one increase dramatically. This is the principle of "self-consistency with majority voting," a strategy that has worked very well for years.

But there's a problem, actually two. The first is economic: generating dozens or hundreds of answers costs a fortune in terms of computational power. It's like renting fifty computers to do the same calculation hoping that the majority will arrive at the right result. The second problem is more subtle: after a certain point, adding more attempts does not significantly improve the results. It's the classic "law of diminishing returns" that economists know well, applied to the world of artificial intelligence.

The Solution: From "Stronger" to "Smarter"

And this is where the brilliant insight of the Meta team comes into play. Instead of continuing to hammer the problem with computational brute force, why not teach the model to recognize when it's about to go down the wrong path? It's a bit like the proximity radar in modern cars: instead of waiting for the impact, it warns you before you're about to crash into an obstacle.

DeepConf, the name of the new method, leverages what researchers call "internal model confidence signals." In simple terms, every time an LLM generates a word or a concept, it has a kind of "internal thermometer" that indicates how sure it is of that choice. It's like when you answer a quiz question: sometimes you're 100% sure, other times you hesitate between two options.

The brilliance of DeepConf lies in transforming this internal "hesitation" into an intelligent filter. Instead of blindly generating hundreds of attempts and then counting them one by one, the system monitors the model's confidence in real time and automatically discards reasoning that shows signs of too high uncertainty. It's like having a personal assistant who whispers in your ear "maybe you should try another approach" when they see you getting stuck on a wrong solution.

How It Works: The Secrets of the New Architecture

From a technical point of view, DeepConf works on two complementary levels. The first is what the researchers call "filtering during generation." Imagine being Sherlock Holmes who, while reasoning aloud, realizes he's on the wrong track and immediately changes direction. This is exactly what DeepConf does: it monitors the model's internal log probabilities token by token and, when it detects patterns of uncertainty, it interrupts that particular line of reasoning and starts a new one.

The second level is "filtering after generation," which works more like an expert editor. Once the model has generated several complete solutions, DeepConf retrospectively analyzes the confidence signals of each reasoning trace and assigns them a reliability score. It's like having a proofreader who doesn't just count errors, but evaluates the overall consistency of the reasoning.

The real magic, however, lies in its implementation simplicity. As the authors point out in their paper, DeepConf requires no additional model training or hyperparameter optimization. It is a "plug-and-play" approach that can be integrated into existing serving frameworks like vLLM without substantial changes to the architecture. It's like installing new software on your computer: you don't have to change the hardware, it works with what you already have.

The Astonishing Numbers

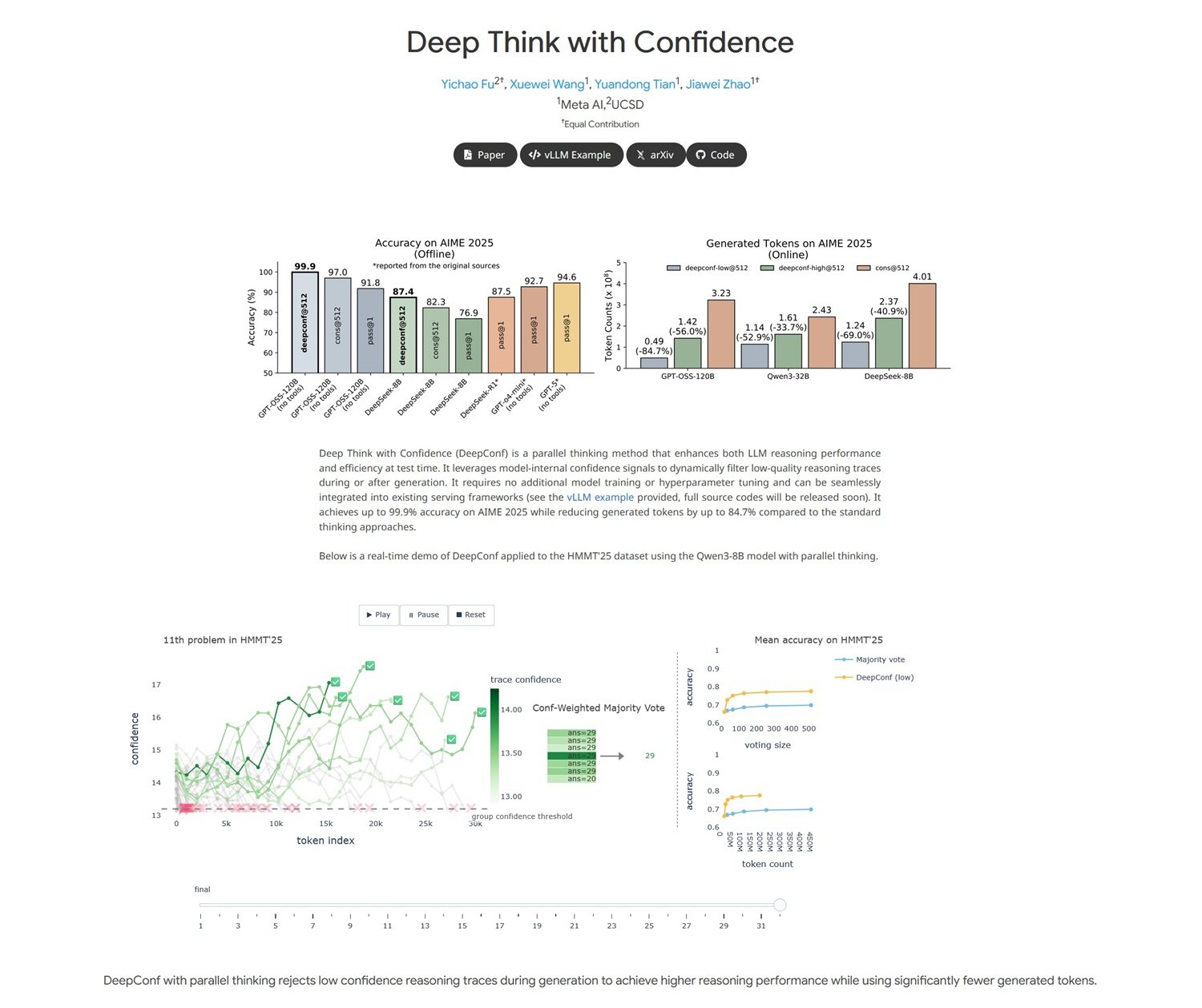

The results obtained by the Meta team are nothing short of impressive, with that "too good to be true" flavor that characterizes truly innovative discoveries. On AIME 2025, one of the most difficult benchmarks for mathematical reasoning (think of it as the final exam for artificial intelligences), DeepConf achieved an accuracy of 99.9% while simultaneously reducing token usage by 84.7% compared to traditional methods.

To understand the scope of these numbers, let's make a cinematic comparison. It's as if someone had invented a way to shoot Hollywood-quality films using a tenth of the usual budget, while maintaining the same visual and narrative quality. In the world of AI, where every generated token has a measurable computational cost, a reduction of 85% literally means cutting operating costs by an order of magnitude.

But it's not just an economic issue. The most fascinating aspect is that DeepConf manages to improve performance precisely by eliminating computational "noise." It's counterintuitive: normally, in computer science, the more resources you throw at a problem, the better results you get. Here, the opposite happens: by removing low-quality attempts, the signal emerges more clearly from the background noise.

The tests were conducted on the most advanced open-source models, including Qwen 3 and the GPT-OSS series, demonstrating that the approach works across different architectures. It's like discovering that a trick works on both iPhone and Android: it means you've probably found something fundamental.

Two Modes, One Goal

DeepConf operates in two distinct modes, like a sports car that can run in both eco and performance mode. The "offline" mode analyzes all generated reasoning traces and then selects those with the best confidence signals. It's perfect for applications where you have time to think and want the highest possible accuracy.

The "online" mode, on the other hand, is designed for real-time applications where speed is crucial. In this case, DeepConf dynamically interrupts reasoning traces that show signs of low confidence and starts new ones on the fly. It's like having an intelligent GPS that, instead of continuing to calculate a route it knows is wrong, immediately changes course to a more promising destination.

The flexibility of this approach is one of its strengths. Developers can calibrate the system according to their specific needs: more conservative for critical applications where errors are not tolerable, more aggressive for use cases where speed prevails over absolute perfection.

Practical Impact: The Economic Revolution

The economic impact of DeepConf could be devastating for the AI industry, in a good way. Think about the implications: if you can get the same performance from a system that costs $1000 a day with one that costs $150, suddenly services that were previously economically unsustainable become accessible to a much wider audience of users and companies.

But it's not just a matter of direct costs. The reduction in generated tokens also means lower CO2 emissions, less stress on data centers, and, ultimately, a more environmentally sustainable AI. It's like switching from an SUV that consumes 15 liters per 100 kilometers to a hybrid car that consumes 4, while maintaining the same speed and comfort.

For companies offering LLM-based services, DeepConf represents a potential competitive game-changer. Whoever manages to implement it first will be able to offer higher quality services at lower prices, creating the kind of competitive advantage that redefines entire industries. It's the classic "disruption" that Clayton Christensen talks about, applied to the world of artificial intelligence.

Future Perspectives: Towards a Self-Aware AI

But perhaps the most fascinating aspect of DeepConf is not even the immediate results, but what it represents as a research direction. For the first time, we have a system that doesn't just generate answers, but develops a primitive form of metacognition: the ability to reflect on its own thought processes.

It is an important step towards what researchers call "self-aware AI," systems that not only solve problems but are also aware of how they are solving them and, above all, when they are failing. We are not talking about consciousness in the science fiction sense of the term, but a form of procedural intelligence that knows when to trust itself and when to be skeptical.

The Meta team has shown that this type of "constructive self-doubt" can be implemented without overhauling existing architectures, paving the way for a new generation of more efficient and economical models. It's as if we've found a way to make machines not only smarter, but also wiser in the sense of knowing their own limits.

Looking to the future, DeepConf could be just the appetizer for a broader revolution. If machines can learn to doubt their own answers in the mathematical field, what prevents them from applying the same principle to creative writing, ethical problem solving, or even scientific research? The path to a truly general-purpose artificial intelligence may well pass through this capacity for constructive self-criticism.

The work of Zhao and his colleagues shows that sometimes the most important revolutions are born from the simplest insights. In a world where everyone is chasing ever larger and more powerful models, they have chosen to focus on efficiency and self-awareness. As the good old Einstein would say, "everything should be made as simple as possible, but not simpler." DeepConf seems to have perfectly struck this balance, opening new frontiers for a smarter, more sustainable, and, paradoxically, more human AI in its ability to question itself.