The Internet That Eats Its Own Tail: When AI Generates Junk That Feeds Other AI

There's a scene in John Carpenter's "The Thing" where the alien assimilates terrestrial organisms, creating increasingly degraded, less-than-perfect copies. Each iteration loses something of the original until the distinction between authentic and replica becomes impossible to draw. It's a powerful image for what is happening to the digital ecosystem: artificial intelligence is consuming human content and regenerating it in an increasingly corrupted form, fueling a cycle of progressive degradation that scientists call "model collapse" but which we could more simply describe as the internet eating its own tail.

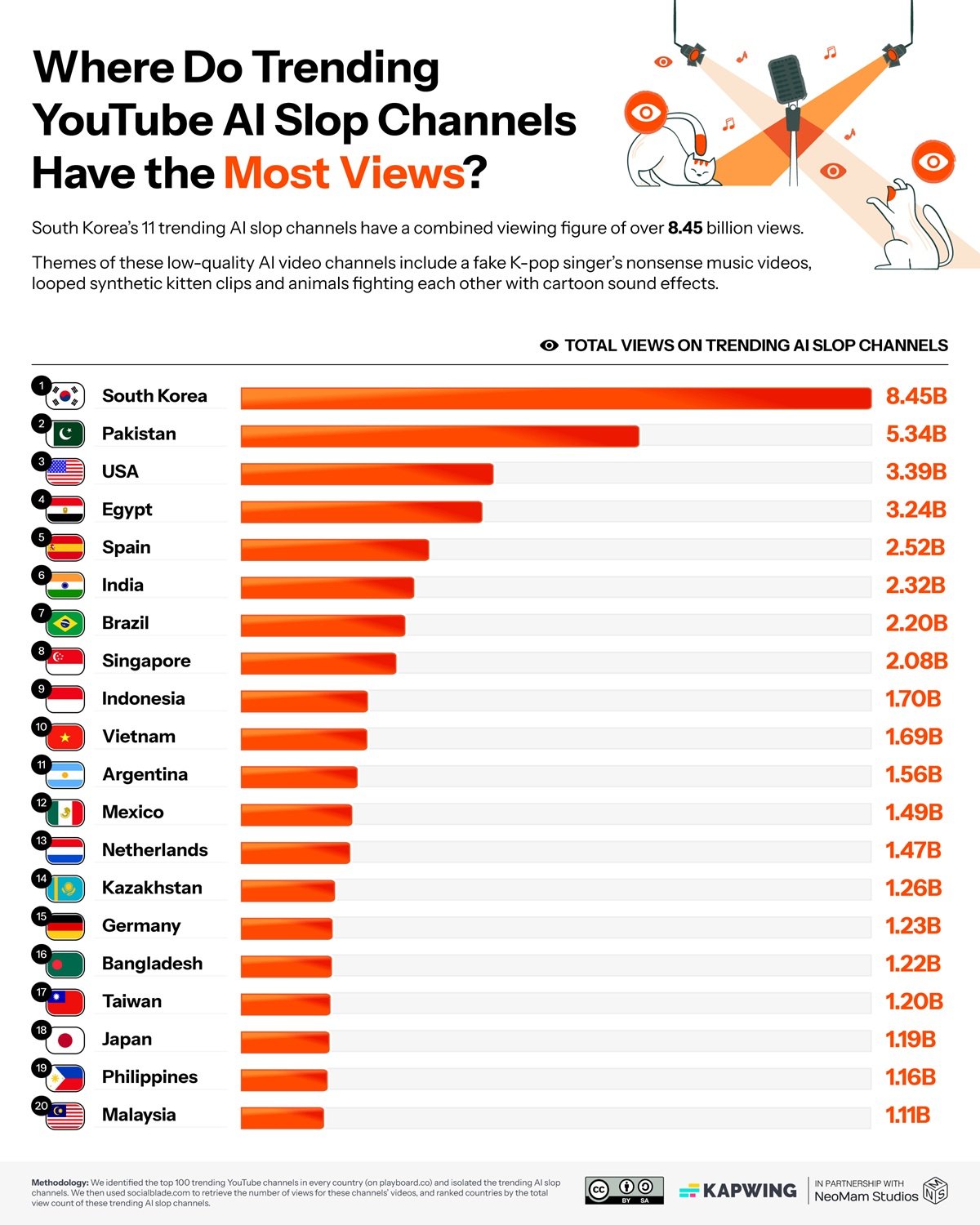

The numbers collected by Kapwing in their latest report have the brutal precision of a medical diagnosis: between 21% and 33% of the content that YouTube suggests to new users is what the industry jargon calls "AI slop," junk generated by artificial intelligence. We are not talking about quality AI content, but about that low-cost industrial production that has only one purpose: to maximize views to generate advertising revenue. Channels like Cuentos Fascinantes in Spain have amassed over twenty million subscribers with entirely synthetic videos, while in South Korea this type of content has reached eight billion views. The slop economy is already worth one hundred and seventeen million dollars a year, with individual channels earning over four million.

The machine works in a simple but effective way. YouTube's algorithms reward novelty and immediate engagement. AI-generated content, mass-produced at very low cost, can saturate the platform with infinite variations of the same theme. A traditional creator takes hours or days to produce a video; an AI system can churn out dozens in the same amount of time, testing multiple variations to identify the one that works best. Fifty-four percent of the first videos seen by new users belong to this category, creating a sort of distorted gateway to the platform.

Neal Mohan, CEO of YouTube, told Time magazine that artificial intelligence represents "the next frontier" for the platform. In a reorganization announced in October 2024, YouTube restructured its product and engineering divisions precisely to align with this AI-centric vision. But there is a disturbing inconsistency: while the company invests heavily in AI tools for creators, its own automated moderation systems are causing mass bans of legitimate channels, with creators seeing their accounts blocked while the channels that stole their content remain active. It is as if YouTube were simultaneously feeding and fighting the same phenomenon, trapped in a systemic contradiction reminiscent of the technology-monopoly dynamics that the European Union is trying to regulate.

The Growing Entropy

But the problem of slop is only the superficial manifestation of something much deeper. While YouTube is filling up with synthetic videos, LinkedIn has reached an even more critical point: according to an analysis by Originality.AI, 54% of long-form posts on the professional platform are generated by artificial intelligence, a 189% jump compared to the pre-ChatGPT period. We are not talking about writing assistance, but about entirely artificial content presented as original thought. LinkedIn's algorithms now reward this massive production, creating a feed where distinguishing the authentic from the synthetic becomes increasingly difficult.

The issue is no longer just qualitative but structural. A paper published on arXiv scientifically documents the phenomenon of "model collapse," the informational entropy that is generated when artificial intelligence models are trained on data produced by other AI models. The authors demonstrate that this practice causes a progressive loss of diversity: the semantic variety measured in bits per token drops from 4.2 to 2.5 in just a few training iterations. It's like making photocopies of photocopies: each generation loses details, nuances, complexity.
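To make the "bits per token" figure more concrete, here is a minimal sketch of one way such a number can be computed: the Shannon entropy of the empirical token distribution in a text. This is an illustrative assumption, not necessarily the metric used in the paper; the function name `entropy_bits` and the two sample sentences are hypothetical.

```python
import math
from collections import Counter

def entropy_bits(tokens):
    """Shannon entropy of the empirical token distribution, in bits per token."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

# Hypothetical samples: one varied sentence, one that keeps repeating itself.
varied = "the cat sat on a mat while rain fell".split()
repetitive = "the cat the cat the cat the cat the".split()

print(f"varied:     {entropy_bits(varied):.2f} bits per token")
print(f"repetitive: {entropy_bits(repetitive):.2f} bits per token")
```

The repetitive sample scores lower because fewer distinct tokens carry the text. A drop like the one reported, from 4.2 to 2.5, would mean the output has become much more predictable in this sense.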

The mechanism is subtle. Current models have been trained on billions of texts produced by humans over decades of online activity. But that mine of original data is running out. A study published in Nature estimates that the "budget" of human content available for training will be exhausted between 2026 and 2032, depending on the scenarios. After that, new models will necessarily have to feed on content generated by previous AI. And this is where the trap is sprung: each training cycle on synthetic data amplifies errors, reduces variety, homogenizes the output. As in a population that reproduces only within itself, genetic diversity collapses.
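The photocopy effect can be reproduced with a toy simulation. The sketch below is not the method of any cited paper: it assumes a "model" is nothing more than a table of token frequencies, that each new generation is estimated only from a finite sample of the previous one, and that the starting distribution is Zipf-like. All names and parameters (`simulate_collapse`, `vocab_size`, `sample_size`) are hypothetical.

```python
import math
import random
from collections import Counter

def entropy_bits(probs):
    """Shannon entropy of a probability vector, in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def simulate_collapse(vocab_size=1000, sample_size=2000, generations=10, seed=0):
    """Return (generation, surviving_tokens, entropy_bits) for each generation."""
    rng = random.Random(seed)
    # Generation 0: a Zipf-like "human" distribution with a long tail of rare tokens.
    weights = [1.0 / rank for rank in range(1, vocab_size + 1)]
    total = sum(weights)
    probs = [w / total for w in weights]
    history = []
    for gen in range(generations + 1):
        support = sum(1 for p in probs if p > 0)
        history.append((gen, support, entropy_bits(probs)))
        # "Train" the next model: sample a finite corpus from the current model
        # and re-estimate token frequencies from that corpus alone.
        corpus = rng.choices(range(vocab_size), weights=probs, k=sample_size)
        counts = Counter(corpus)
        probs = [counts[t] / sample_size for t in range(vocab_size)]
    return history

for gen, support, bits in simulate_collapse():
    print(f"generation {gen:2d}: {support:4d} tokens survive, {bits:.2f} bits")
```

In this toy setup a token that is not sampled in one generation has probability zero in every later one, so the number of surviving tokens can only shrink. Rare tokens go first, which is the loss of "details, nuances, complexity" in miniature. Real training pipelines are far more complex, so this shows the direction of the effect, not its size.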

The Digital Ouroboros Serpent

YouTube's slop and model collapse are not separate phenomena but two sides of the same coin. The junk videos generated today will become part of the datasets with which tomorrow's models will be trained. LinkedIn's AI posts will be indexed, archived, used as training material. It's a perfect feedback loop: low-quality content generates corrupt data that produces worse models that in turn create even more degraded content. A snake that eats its own tail, like the alchemical Ouroboros, but without any promise of rebirth.

The practical implications are already measurable. Research published in JMIR found that 5.3 percent of biomedical educational content on YouTube contains AI-generated information of dubious quality. We are not talking about entertainment but about material that doctors, students and patients consult to make health decisions. The "illusory truth" effect is well documented: repeating false or inaccurate information increases its perceived credibility. If YouTube continues to pump out unverified AI content, we are building a disinformation infrastructure that will become increasingly difficult to dismantle.

On an economic level, slop creates profound distortions. The one hundred and seventeen million dollars it generates annually is not new wealth: it is diverted from legitimate creators to those who have figured out how to "game" the algorithmic system with low-cost industrial production. It is a form of adverse selection: the algorithm rewards those who produce in quantity at the expense of those who produce in quality, creating a perverse incentive that pushes the entire ecosystem downwards. And while this is happening, giants like Google continue to redefine their relationship with AI, investing heavily in a technology that at the same time threatens to cannibalize the very foundations of the web they helped build.

Normalization is perhaps the most insidious aspect. When half of the long-form posts on LinkedIn are AI-generated and up to a third of what YouTube suggests to new users is slop, our critical sense adapts. We begin to expect that patina of artificiality, that lack of authenticity. The risk is that we are training a generation of users to no longer distinguish, to no longer seek the original. And when you no longer seek the original, you stop valuing it and therefore producing it.

The Invisible Winners

While the digital ecosystem is filling up with junk and models risk collapse, there is a parallel economy that thrives on this chaos. Nvidia closed the third quarter of fiscal 2026 with fifty-seven billion dollars in revenue, a sixty-two percent increase year-on-year. The company controls 92% of the GPU market and monetizes every single AI generation, whether it's quality content or slop. CEO Jensen Huang talks about "incredible demand" and "sold out" cloud GPUs, projecting investments in AI infrastructure of between three and four trillion dollars by 2030.

It's not just Nvidia. Cloud providers like AWS, Azure and Google Cloud bill billions to companies that train models and generate content. OpenAI and Anthropic raise hundreds of millions in funding just as the web fills up with the content their models produce. It's a perfect paradox: the more AI content is generated, the more computing power is needed to train subsequent models on increasingly polluted datasets, generating growing profits for the infrastructure that fuels the problem itself. The YouTube slop producers who earn four million are the visible tip of the iceberg. Below the surface, there is an industry worth tens of billions that monetizes every watt of energy spent generating and processing content, regardless of its quality or usefulness.

Towards Which Ecosystem

But there are concrete alternatives that already work. Common Crawl, despite recent controversies over paywall management, remains the largest public archive of web data and is used in 64% of language models. The crucial difference lies in the curation: filtered versions like Google's C4 or EleutherAI's Pile-CC apply aggressive cleaning processes. Nvidia has developed Nemotron-CC, a 6.3-trillion-token dataset built through ensembles of classifiers and synthetic rephrasing techniques that maintain quality while expanding quantity.
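To give an idea of what "aggressive cleaning" can look like in practice, here is a minimal sketch of a heuristic filter with exact deduplication. It is only loosely inspired by the kind of rules such pipelines use: the thresholds, the function names (`clean_document`, `curate`) and the sample pages are illustrative assumptions, not the actual rules of C4, Pile-CC or Nemotron-CC.

```python
import hashlib

# Characters that count as the end of a "real" sentence (an assumption).
TERMINAL = (".", "!", "?", '"')

def clean_document(text, min_words_per_line=3, min_lines=2):
    """Keep only sentence-like lines; return None if too little survives."""
    kept = [
        line.strip()
        for line in text.splitlines()
        if line.strip().endswith(TERMINAL)
        and len(line.split()) >= min_words_per_line
    ]
    return "\n".join(kept) if len(kept) >= min_lines else None

def curate(documents):
    """Clean each document and drop exact duplicates of the cleaned text."""
    seen = set()
    result = []
    for doc in documents:
        cleaned = clean_document(doc)
        if cleaned is None:
            continue
        digest = hashlib.sha256(cleaned.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        result.append(cleaned)
    return result

# Hypothetical scraped pages: navigation junk, an article, and a copy of it.
pages = [
    "Click here\nSubscribe now",
    "This is a real sentence.\nHere is another full sentence.\nMenu",
    "This is a real sentence.\nHere is another full sentence.",
]
print(curate(pages))
```

Of the three sample pages, only one cleaned article survives: the navigation-only page is discarded and the copy is recognized as a duplicate once its stray "Menu" line is removed. Production pipelines add language detection, near-duplicate detection and learned quality classifiers on top of rules like these.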

Cohere Labs is building radically different alternatives with the Aya project, an open science initiative that has involved three thousand researchers from 150 countries to create manually curated multilingual datasets in 101 languages. Not blind automation, but human curation on a large scale. The Data Provenance Explorer, developed by MIT and Cohere Labs, has tracked and verified two thousand of the most used datasets, creating for the first time a traceability system that documents licenses, creators and data lineage. Platforms like Substack have built economic models that reward verified human authenticity, demonstrating that there are markets willing to pay for certified original content.

Watermarking AI content remains a partial but necessary solution. The European Union, with the AI Act, has begun to impose transparency obligations. YouTube has announced labeling systems, although implementation remains uneven. But the issue is not just technological. Structural interventions are needed: platforms must rethink algorithms whose reward for immediate engagement creates perverse incentives. Training datasets require rigorous curation that prioritizes verified human sources. And massive investment in media literacy is needed: recognizing AI content is no longer optional but a fundamental competence.

The critical point is that we are at a crossroads. We can continue on the current trajectory, where every year a larger percentage of the web becomes AI-generated, where models are trained on the output of other models, where informational diversity progressively collapses to create a homogeneous, predictable, sterile digital ecosystem. Or we can intervene now, with intelligent regulation, conscious technical choices and a renewed appreciation for authentic human content.

YouTube's bet is that AI can democratize creation, lowering technical barriers and allowing anyone to become a creator. But if that democratization mainly produces junk that pollutes the ecosystem and fuels the collapse of future models, we are not democratizing anything: we are just accelerating a systemic degradation. As with Carpenter's alien, each copy becomes less perfect than the previous one. And unlike in the movie, there is no Kurt Russell with a flamethrower ready to stop the assimilation.

The question is no longer whether AI will transform the internet. That transformation is already underway. The question is: what are we transforming it into? Into a richer, more varied and accessible system, or into an echo chamber where machines speak mainly to other machines, while we humans become increasingly passive spectators of an informational deterioration that we have set in motion but which we are struggling more and more to control?