Qwen3-Max-Preview: The 1 Trillion Parameter Behemoth

September 5, 2025, marks a watershed date in the evolution of global artificial intelligence. Alibaba has officially unveiled Qwen3-Max-Preview, the first model in the Qwen family to surpass the psychological threshold of one trillion parameters. The milestone is not just numerical: it represents China's entry into the exclusive club of technological powers capable of competing at the highest levels in the generative AI arena.

Like Akira awakening from the cryostat in Otomo's masterpiece, this computational behemoth emerges at a time when the industry seemed to be leaning towards more compact and efficient models. Alibaba's choice to aim high, very high, is a statement of intent that overturns market expectations and reignites the race to extreme sizes.

A Trillion Reasons to Be Amazed

The numbers for Qwen3-Max-Preview are impressive even by today's standards. With over 1 trillion parameters, the model handles a context window of 262,144 tokens, with limits of up to 258,048 input tokens and up to 32,768 output tokens per request. To put these numbers in perspective, it means being able to simultaneously process the equivalent of about 500 pages of text, a capacity that opens up application scenarios that were unthinkable until yesterday.
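A quick check on these figures: the input and output limits add up to more than the full window (258,048 + 32,768 = 290,816), so they read most naturally as separate caps rather than a strict split. The sketch below assumes, without confirmation from Alibaba, that prompt and completion share the same 262,144-token window; the function name `max_output_for` is purely illustrative.

```python
# Figures quoted in the article for Qwen3-Max-Preview.
CONTEXT_WINDOW = 262_144  # total tokens per request
MAX_INPUT = 258_048       # maximum prompt tokens
MAX_OUTPUT = 32_768       # maximum generated tokens


def max_output_for(prompt_tokens: int) -> int:
    """Return how many output tokens remain for a prompt of the given size.

    Assumption (not confirmed by the source): prompt and completion share
    the same context window, and each is also capped by its own limit.
    """
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    if prompt_tokens > MAX_INPUT:
        raise ValueError("prompt exceeds the maximum input size")
    return min(MAX_OUTPUT, CONTEXT_WINDOW - prompt_tokens)


if __name__ == "__main__":
    for n in (10_000, 229_376, 258_048):
        print(n, max_output_for(n))
```

Under that assumption, a prompt at the full input limit would leave room for only 4,096 output tokens, while any prompt up to 229,376 tokens could still receive the full 32,768.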

The architecture also integrates context caching capabilities, an engineering solution that significantly speeds up multi-turn sessions by reducing latency in prolonged conversations. It's like having an assistant who not only remembers everything you've told them but also keeps it always at hand without having to start from scratch every time.
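To make the idea concrete, here is a toy accounting model of prefix reuse, the general principle behind context caching. It is not Alibaba's implementation, whose details are not public: the class `PrefixReuseMeter` is hypothetical, and whitespace-separated words stand in for real tokens.

```python
from typing import List, Sequence, Tuple


def shared_prefix_len(a: Sequence[str], b: Sequence[str]) -> int:
    """Count how many leading items two token sequences have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


class PrefixReuseMeter:
    """Toy model: remembers the previous prompt and reports, for each new
    prompt, how many leading tokens could be reused versus processed anew."""

    def __init__(self) -> None:
        self._previous: List[str] = []

    def submit(self, tokens: Sequence[str]) -> Tuple[int, int]:
        cached = shared_prefix_len(self._previous, tokens)
        self._previous = list(tokens)
        return cached, len(tokens) - cached


if __name__ == "__main__":
    meter = PrefixReuseMeter()
    turn1 = "system: be brief user: hi".split()
    turn2 = turn1 + "assistant: hello user: thanks".split()
    print(meter.submit(turn1))  # nothing cached on the first turn
    print(meter.submit(turn2))  # the whole first turn is a reusable prefix
```

In a multi-turn chat each request repeats the conversation so far, so the reusable prefix grows with every turn and only the newest messages need fresh processing. That is where the latency saving comes from.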

Binyuan Hui, Staff Research Scientist of the Qwen Team, confirmed that "Qwen-Max has successfully scaled to 1T parameters and development continues to advance," hinting that this Preview version might just be an appetizer for something even more ambitious.

When Saitama Enters the Arena

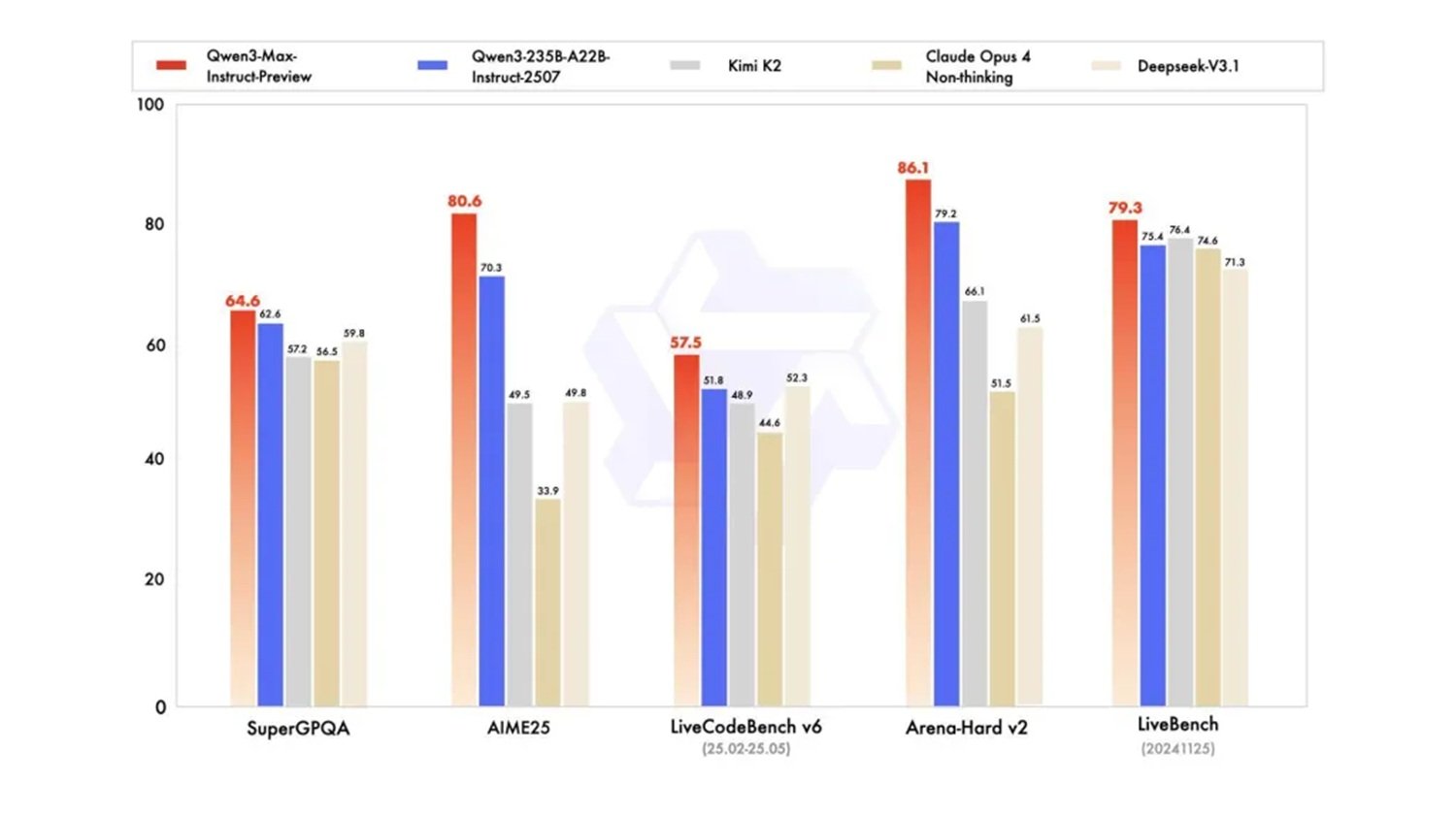

The benchmarks speak for themselves and certify the performance of this Chinese giant. Qwen3-Max-Preview surpasses the company's previous flagship model, Qwen3-235B-A22B-2507, and competes directly with the best Western models on standardized tests such as SuperGPQA, AIME25, LiveCodeBench v6, Arena-Hard v2, and LiveBench.

Particularly significant is the comparison with Claude Opus 4, GPT-4, and other leading models, where Qwen3-Max-Preview demonstrates competitive if not superior performance in several categories. Like Saitama in One Punch Man, this Chinese model has come almost out of nowhere to compete with established champions, bringing a computational power that redefines the industry's balance.

Early testers report surprising response speeds, sometimes faster than ChatGPT, and emergent reasoning behaviors that were not specifically programmed. Ahsen Khaliq of Hugging Face documented the model's creative ability by generating a pixelated voxel garden with a single prompt, demonstrating versatility that goes far beyond traditional benchmarks.

The Great Betrayal of Open Source

But this is where the story takes an unexpected turn. Unlike previous Qwen releases, this model will not be available in an open-source version. Access is limited exclusively to Alibaba Cloud APIs, Qwen Chat, OpenRouter, and as a default tool in Hugging Face's AnyCoder.

This turn represents a paradigm shift for Alibaba, which had built its reputation in AI on the philosophy of open access. Previous Qwen models challenged Western labs precisely because their weights were immediately available, allowing researchers and developers around the world to experiment and contribute to performance improvements.

The choice of closed source raises profound questions about the future of distributed innovation in AI. While the desire to monetize such massive investments is understandable, it also sets a worrying precedent for the global research ecosystem. It's as if the Library of Alexandria suddenly decided to close its doors to the public, maintaining access only for paying customers.

The Time Factor in the AI Era

The timing of the Qwen3-Max-Preview launch is not accidental and reveals a sophisticated temporal strategy that deserves in-depth analysis. While the Western industry was focusing on more efficient and compact models following the "less is more" philosophy, Alibaba bet on the opposite direction, investing heavily in a computational scale that is now proving to be a winner. It's a move reminiscent of Kamoshida's strategy in Persona 5: while all the other players were following conventional rules, someone decided to completely change the game.

The research team's announcement of updates "as early as next week" suggests an incredibly accelerated development cycle, possible only thanks to industrial-scale computational and human resources. This pace of innovation presents Western competitors with an unprecedented challenge: competing not only on the quality of a single model but on the speed of iteration and continuous improvement.

The strategic integration with already established platforms like OpenRouter and Hugging Face's AnyCoder demonstrates a mature understanding of the global developer ecosystem. Alibaba is not just launching a product; it is building a bridge to the international community while maintaining total control over the core technology. It's a strategy that combines apparent openness with substantial control, allowing for widespread adoption without giving up the competitive advantages of closed source.

Supercar Performance, Utility Car Price

The pricing model for Qwen3-Max-Preview follows a tiered structure based on the tokens used. Costs start at $0.861 per million input tokens in the 0-32K range, rising to $2.151 per million in the highest 128K-252K token range. Output tokens follow a similar progression at higher prices, reaching $8.602 per million in the highest range.
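As a rough illustration of how tiered pricing plays out, the sketch below estimates the input cost of a single request. It rests on several assumptions not stated in the source: that "K" means 1,000 tokens, that the tier is chosen by the request's total input size, and that the tier's rate applies to every input token in that request. The price of the middle 32K-128K tier is not quoted in this article, so it is left as `None` rather than guessed. The names `INPUT_TIERS` and `input_cost_usd` are illustrative only.

```python
from typing import List, Optional, Tuple

# (upper bound of the tier in input tokens, USD per million input tokens).
# Only the first and last prices are quoted in the article; the middle
# tier is deliberately left as None.
INPUT_TIERS: List[Tuple[int, Optional[float]]] = [
    (32_000, 0.861),
    (128_000, None),
    (252_000, 2.151),
]


def input_cost_usd(input_tokens: int) -> float:
    """Estimate the input cost of one request under the assumptions above."""
    if input_tokens < 0:
        raise ValueError("input_tokens must be non-negative")
    for upper_bound, price_per_million in INPUT_TIERS:
        if input_tokens <= upper_bound:
            if price_per_million is None:
                raise ValueError("price for this tier is not given in the article")
            return input_tokens / 1_000_000 * price_per_million
    raise ValueError("input exceeds the largest priced tier")


if __name__ == "__main__":
    print(round(input_cost_usd(20_000), 4))
    print(round(input_cost_usd(200_000), 4))
```

Under these assumptions a 20,000-token prompt costs about $0.017 in input, while a 200,000-token prompt costs about $0.43: ten times the tokens, but roughly twenty-five times the price.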

This pricing structure reveals a sophisticated business strategy: making the model economically accessible for simple and short tasks, but significantly scaling the costs for intensive, long-context uses. It's a move that democratizes access to basic capabilities while maintaining attractive margins on more complex enterprise use cases.

The quality-to-price ratio, especially in the lower usage tiers, appears competitive with equivalent Western models, potentially opening up new markets for developers and small businesses that were previously excluded from the frontier AI ecosystem.

Beyond Benchmarks: AI that Simulates Life

But where Qwen3-Max-Preview shows its true potential is in practical uses that go beyond standardized tests. The model demonstrates emergent capabilities in structured reasoning, complex code generation, handling of structured data, and advanced agentic behaviors.

Particularly interesting are the demos showing simulations of populations and complex systems, applications that require not only computational power but also a sophisticated understanding of systemic dynamics. It's like watching Ghost in the Shell and realizing that the film's social simulations may no longer be science fiction.

The extended context window allows for applications that were previously unthinkable: analysis of entire novels, processing of complex databases, coding sessions that maintain context for thousands of lines of code. These are use cases that transform AI from a one-off assistant to a persistent work partner.

Towards Post-Trillion Models

However, surpassing the trillion-parameter threshold calls, in our opinion, for critical reflection: the race for pure computational power, although it has produced extraordinary results, is running into unsustainable costs on all fronts. These costs are not only economic (training these giants requires investments of tens if not hundreds of millions of dollars), but above all environmental, due to enormous energy consumption, and strategic, due to dependence on increasingly scarce chips and resources.

The future, as we also discussed in our latest article on the "Deep Think with Confidence" technique, cannot be a further escalation in size. True progress will lie in a more "intelligent" and less resource-hungry approach: hybrid architectures that combine smaller but specialized foundation models, structured reasoning techniques that cut superfluous computation, and a focus on pure efficiency through model merging and distillation. The real engineering challenge is not to train a 2T parameter model, but to achieve the same (or better) performance with a tenth of the resources.

The next milestone will not be a bigger number, but a better algorithm. The next "surprising" release from the Qwen team could, at least we hope, be a step in this direction: to demonstrate that artificial intelligence can evolve without devouring the world.

The New Balance of Power

The arrival of Qwen3-Max-Preview redraws the geopolitical map of artificial intelligence. For the first time, a Chinese model competes directly with American flagships not only in terms of performance but also in commercial accessibility and deployment speed.

Alibaba's strategic choice to focus on a trillion-parameter model while Western competitors were pursuing efficiency with smaller parameter counts is now proving to be a winning bet. It demonstrates that in the world of AI, as in Kasparov's chess matches against Deep Blue, a counter-current strategy can completely overturn expectations.

The impact on the enterprise market will likely be significant, especially in Asia where Alibaba Cloud already has a consolidated presence. The combination of high performance, competitive pricing, and native integration with the Chinese cloud ecosystem creates an offer that is difficult for companies in the region to ignore.

The shift to closed source, though controversial, could signal a maturation of the industry towards more sustainable business models. While this limits open-source innovation, it also guarantees the resources needed to support long-term research on ever-increasing computational scales.

Qwen3-Max-Preview is not just a new AI model: it is the manifesto of a new era in which technological supremacy is no longer the exclusive preserve of Silicon Valley. The dragon has awakened, and the world of artificial intelligence will never be the same.