DeepSeek OCR: When an Image is Worth 10,000 Tokens

We usually avoid chasing every announcement from big tech companies, unless it's a true innovation. And this time, it really seems to be the case. DeepSeek-OCR, released on October 20, 2025, has garnered over 7,000 stars on GitHub in just a few days and has captured the attention of Andrej Karpathy, former AI director at Tesla and a legendary figure in the field of deep learning. It's no small feat when one of the brightest minds in computer vision calls a model "quite interesting" and starts philosophizing about the future of tokenizers. But what's so special about this 3-billion-parameter model that has sparked such enthusiasm?

The Architecture of Reversal

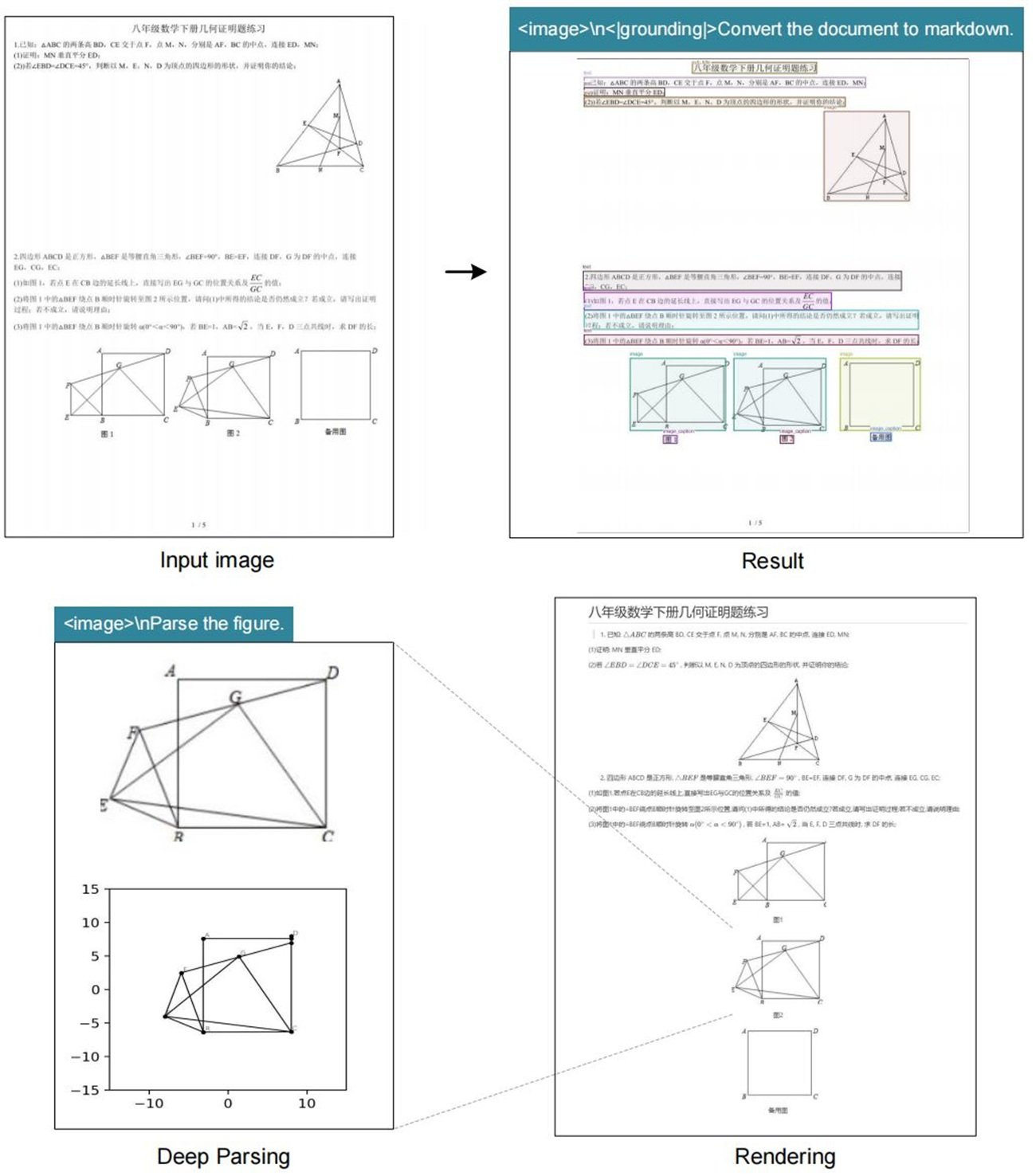

DeepSeek-OCR overturns a seemingly established paradigm: instead of converting images to text and then tokenizing it, it transforms text into an image and compresses it optically. It's as if someone looked at the Rosetta Stone backward and realized that hieroglyphics are more efficient than the alphabet. The idea is as simple as it is counterintuitive, and it recalls that scene from Tenet where bullets travel back in time: here, tokens turn back into pixels.

The architecture is divided into two main components. The DeepEncoder takes a high-resolution document, processes it through a visual encoder based on SAM and CLIP, then compresses it using a convolutional module that drastically reduces the number of tokens required. The decoder is a 3-billion-parameter Mixture-of-Experts model that interprets these compressed "vision tokens" and produces structured output.

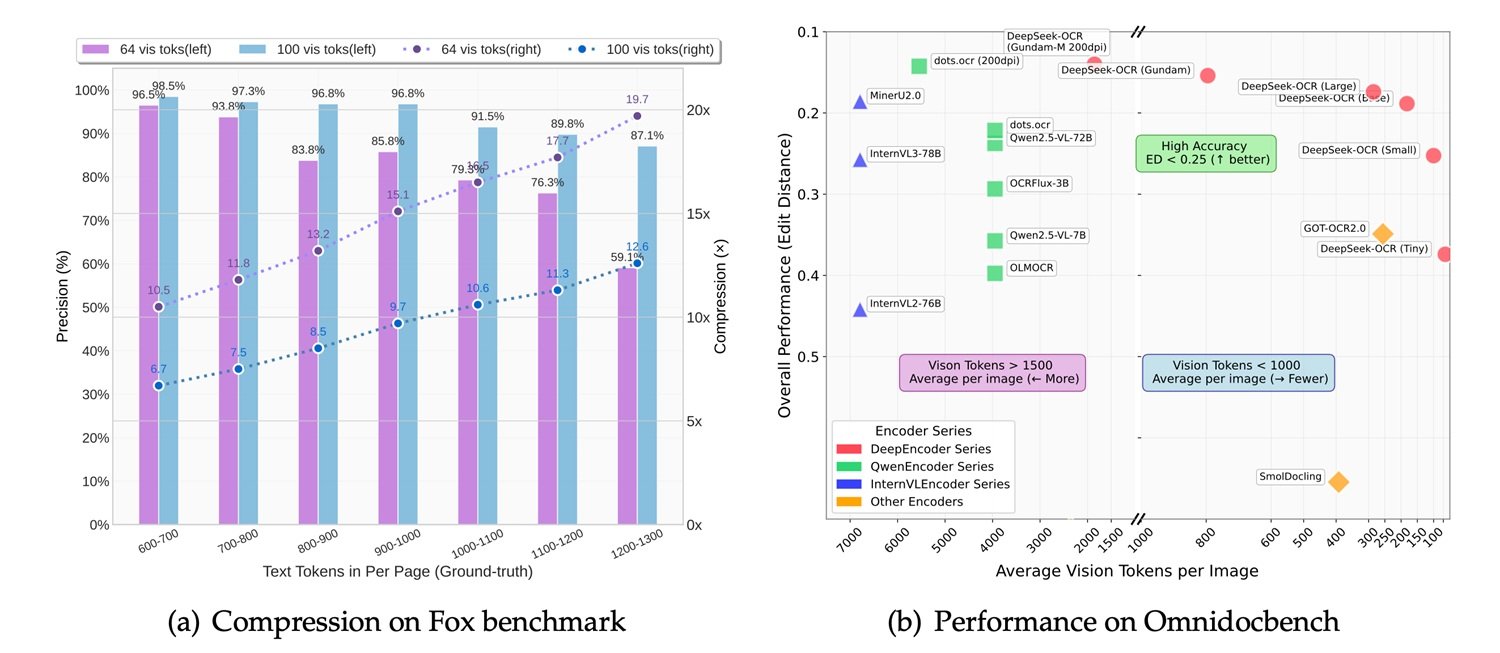

The numbers tell an interesting story: with 10x compression, the system maintains 97% accuracy. Pushing the ratio to 20x, the accuracy drops to 60%, but for many use cases, it remains more than acceptable. This means that a thousand-word article, which would require about a thousand tokens in text format, can be represented with just 100 vision tokens while keeping the information almost intact. DeepSeek claims that a single NVIDIA A100 GPU can process 200,000 pages per day with this system, a throughput that dwarfs traditional OCR pipelines.

Benchmarks and Comparisons: Where It Shines (and Where It Doesn't)

The comparison with the competition reveals both light and shadow. On OmniDocBench, the benchmark that tests extraction capabilities on complex documents, DeepSeek-OCR performs well but doesn't dominate. GOT-OCR 2.0, developed by Peking University, remains superior in terms of pure accuracy, especially on documents with complex layouts or mathematical formulas. MinerU 2.0, another Chinese contender, shows similar performance but with a more traditional architecture.

In the comparison with generalist multimodal vision-language models, the situation becomes more interesting. MiniCPM-V 2.6, InternVL 2.5, and the recent Mistral OCR are all larger models, with parameters ranging from 7 to 20 billion. DeepSeek-OCR, with its 3 billion, plays in a different league. As Karpathy himself notes, the model is "maybe a bit worse than dots" (likely referring to Gemini or other closed systems), but the admission is sincere and symptomatic: it's not a matter of absolute supremacy, but of relative efficiency.

IBM Docling, another open-source solution for document analysis, adopts a hybrid approach with modular pipelines and achieves excellent results on technical and scientific documents, but it requires more computational resources. Microsoft Florence-2, despite being a more generic vision model, shows decent OCR capabilities but struggles in situations where preserving the document's structure is necessary.

The true strength of DeepSeek-OCR emerges in specific use cases: long documents, batch processing, applications where speed is critical and a slight loss of accuracy is tolerable. It's the technological equivalent of choosing a mirrorless camera over a medium format one: less perfect, but much more versatile and practical.

From Theory to Practice: Where It's Really Needed

But when does it make sense to implement DeepSeek-OCR in a real project? The answer depends on the specific context. The most promising applications involve scenarios where volume and speed matter more than absolute perfection. Think of digitizing historical paper archives, where millions of pages need to be converted into a searchable format: here, the ability to process 200,000 pages per day on a single GPU makes the difference between a feasible project and an economically unsustainable one.

In the enterprise world, automatic data extraction from invoices, receipts, or accounting documents represents another fertile ground. Companies like Dataconomy highlight how law firms and compliance departments could benefit from the massive analysis of contracts, where maintaining the visual structure of the document is as crucial as extracting the text. A lawyer searching for a specific clause in ten thousand non-disclosure agreements doesn't need 99.9% accuracy, but to quickly find the relevant documents.

However, a shadow looms over these scenarios: the lack of transparency about the training data. DeepSeek has not released details on the dataset used to train the model, and this is a non-trivial problem. An OCR trained primarily on Chinese financial documents might misinterpret European invoices, just as one predominantly exposed to printed texts might struggle with handwriting. The opacity of the data makes it difficult to assess a priori whether the model is suitable for a specific use case, forcing empirical tests that not everyone can afford.

Open Source Philosophy in the Time of the Chip War

The decision to release DeepSeek-OCR completely open source, with model weights downloadable from Hugging Face and code on GitHub, clashes violently with the fate of DeepSeek's R2 model, still in limbo. The geopolitical context explains everything: after the viral success of DeepSeek-R1 in early 2025, Chinese authorities pushed the company to abandon NVIDIA GPUs in favor of Huawei's Ascend chips for training R2.

The result was a technical disaster. According to the Financial Times, DeepSeek failed to complete a single successful training run on Huawei chips, despite a team of engineers being sent to the site. The Trump administration had banned the export of NVIDIA's H20 to China in April 2025, and DeepSeek found itself caught between American sanctions and Chinese government pressure. CEO Liang Wenfeng, dissatisfied with R2's performance, had to choose: technological patriotism or concrete results.

In this scenario, releasing DeepSeek-OCR as open source becomes a multidimensional strategic move. First, it bypasses hardware limitations: a 3-billion-parameter model can run on consumer hardware, reducing dependency on datacenters full of unobtainable GPUs. Second, it builds soft power: while R2 languishes on DeepSeek's servers, OCR wins over developers worldwide. Third, it bypasses restrictions: an open-source model cannot be effectively "banned," it can only be replicated and improved by the community.

It's the same strategy Meta used with Llama: if you can't win on the closed commercial front, open everything up and let the ecosystem do the work. As Dataconomy reports, the model reached 4,000 stars on GitHub in less than 24 hours, a viral adoption that no marketing campaign could buy.

The Future of OCR: Vision vs. Text

The most provocative reflection comes from Karpathy himself, who in his X thread raises a philosophical question: "Maybe it would make more sense if all LLM inputs were always and only images." It's a statement that sounds heretical to those who have spent years perfecting tokenizers and text embeddings.

Karpathy lists four arguments: greater information compression, a more general data stream that includes formatting and colors, the ability to use bidirectional attention instead of autoregressive, and the elimination of the "ugly tokenizer" with all its Unicode, security, and encoding problems. His point is simple: a smiling emoji should be represented as a smiling face, pixels and all, not as an abstract token that has lost all visual connection to its original meaning.

Xie Saining, an assistant professor at New York University, agrees with this vision of convergence between computer vision and natural language processing. But the enthusiasm must be tempered with realism. Text tokenizers have existed for decades for a reason: they are efficient for pure natural language. Text rendered as an image, even compressed, takes up more space than a good BPE tokenizer for purely textual content.

The real use case is hybrid: documents where layout, formatting, and visual structure are an integral part of the meaning. Legal contracts where indentation matters. Financial reports where tables and graphs coexist with text. Scientific articles full of equations. In these scenarios, DeepSeek-OCR shines because it preserves the visual context that a text parser would destroy.

On the other hand, for a chat conversation or a simple text prompt, converting everything to an image is a waste. It's like using an oscilloscope to measure temperature: technically possible, but absurd. Simon Willison notes that DeepSeek-OCR works best when combined with other tools, not as a universal substitute.

The debate is reminiscent of the one between vinyl and digital in music: text purists argue that symbolic representation is cleaner, while pixel visionaries say that only the image captures the totality of the information. The truth, as always, lies in the middle: native multi-modality, where models can dynamically choose the best representation for each type of input.

Conclusions: Innovation or an Elegant Workaround?

DeepSeek-OCR is both. It's genuine innovation in its approach to contextual compression, with an architecture that challenges established assumptions about how to represent textual information. But it's also a brilliant workaround for concrete constraints: few GPUs, political pressure, the need for extreme efficiency.

The model on Hugging Face runs at 2,500 tokens per second on an A100-40G, an impressive performance considering the complexity of the task. Developers can easily integrate it into their pipelines, and the open-source license allows for modifications and adaptations. For those working with large volumes of documents, it could be the solution they were looking for.

However, the critical aspects should not be ignored. The accuracy is not better than the state of the art, as admitted by Karpathy himself. The quality of the training data, crucial for any OCR system, remains opaque in the official documentation. And the model is optimized for Chinese and English documents, with limited support for other languages.

The viral success on GitHub and the community's enthusiasm suggest that DeepSeek has struck a nerve: the desire for efficient, open, and pragmatic tools in an era of ever-larger and more expensive models. While tech giants compete over who has the largest datacenter, DeepSeek shows that innovation can still happen in the corners, finding efficiency where others see only the need for more brute force.

Like that final scene in Ghost in the Shell where Motoko Kusanagi merges with the Puppet Master, perhaps the future of AI is not a total victory for text or vision, but a hybrid synthesis where both coexist and complement each other. DeepSeek-OCR is a step in that direction, imperfect but fascinating, pragmatic yet visionary. And above all, it's open source: which means that in six months, some brilliant teenager will have probably already solved the problems that seem limiting today. This, after all, is the true power of open source: not perfection, but infinite iteration.