Silicon Valley: $100 Million Against AI Regulation

As Washington prepares for one of the most significant regulatory battles of the 21st century, Silicon Valley has decided to play its best cards. As in a poker game where every move can determine the future of an entire industry, tech giants have put a figure on the table that would make even Scrooge McDuck pale: over $100 million aimed at influencing the 2026 midterm elections.

The strategy is certainly not new in the American political landscape, but the scope and surgical precision of this operation are more reminiscent of House of Cards tactics than traditional election campaigns. At the center of this maneuver is Leading the Future, a super-PAC launched this month that represents the most ambitious tech lobbying effort ever seen in America.

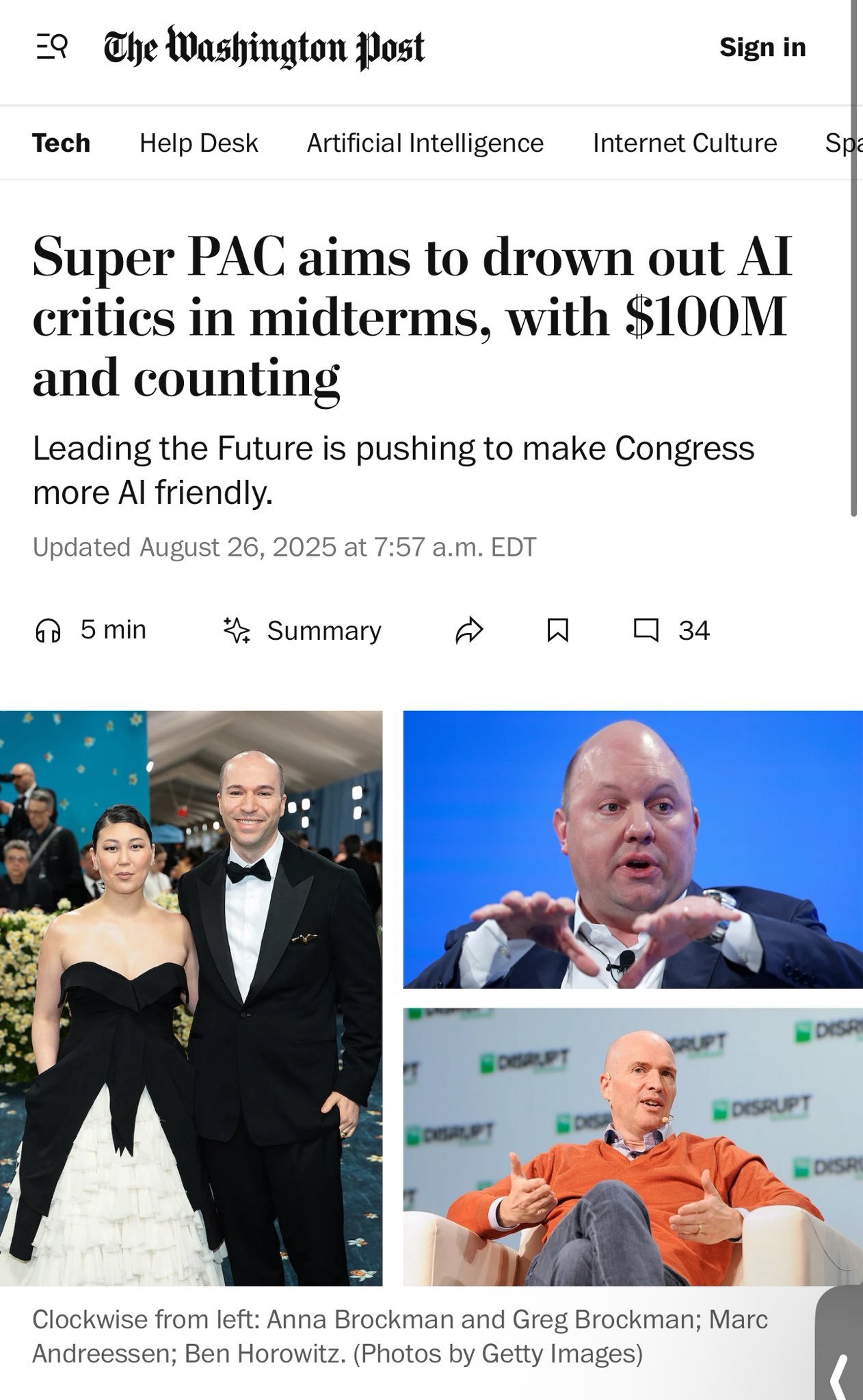

The Godfathers of Innovation

Leading this anti-regulation crusade are names that could easily come out of an episode of Mike Judge's Silicon Valley: Andreessen Horowitz, the venture capital fund that has turned more startups into unicorns than Dungeons & Dragons ever conjured, and Greg Brockman, president of OpenAI and one of the brains behind ChatGPT. They are not alone in this battle: Ron Conway of SV Angel, Joe Lonsdale of 8VC Management, and even Perplexity AI have joined forces in what looks like the Justice League of technology.

The organizational structure of Leading the Future is as sophisticated as a machine learning algorithm. The super-PAC uses a network that includes direct donations, state-level PACs, a 501(c)(4) arm, and digital advertising campaigns to support pro-AI candidates and hinder those it sees as obstacles to innovation. It is a multi-level approach that spans federal and state politics, recognizing that the future of artificial intelligence is decided as much in individual states as in Washington.

The choice of protagonists is not accidental. Andreessen Horowitz is not only one of the most influential venture capital firms in the Valley, but it also has a long history of strategic investments in AI companies. The presence of Brockman, a central figure in the OpenAI ecosystem, gives the coalition a technical credibility that goes beyond mere financial weight. It's like the producers and directors of a major Hollywood studio joining forces to influence the rules of cinema.

The Divide and Conquer Strategy

The most interesting aspect of this operation is its bipartisan nature. Leading the Future will support both Democrats and Republicans, as long as they share an "innovation-friendly" view of AI regulation. It is a shrewd move reminiscent of the pharmaceutical industry's tactics in the 1990s: instead of siding with one party, it focuses on specific individuals who can influence key decisions.

The geographical targets are just as strategic. California, New York, Illinois, and Ohio represent not only some of the most important markets for technology, but also states with particularly active legislators on the AI regulatory front. It's like playing Risk, but with real consequences for billions of dollars in investments and thousands of jobs.

California, in particular, is a crucial battleground. The state passed some 18 AI-related laws in 2024 alone, creating one of the most complex regulatory frameworks in the world. The recent battle over SB 1047, the controversial AI safety bill that Governor Newsom ultimately vetoed, showed how delicate the balance between innovation and regulation is.

Newsom's veto was a temporary victory for the industry, but the proliferation of legislative proposals shows that regulatory pressure is far from diminished. Assembly Bill 2885, which aims to unify the definition of "Artificial Intelligence" across various California laws, is just one example of how legislators are trying to create a coherent regulatory framework for a rapidly evolving sector.

Meta Plays Its Own Game

In parallel with the creation of Leading the Future, Meta has launched its own super-PAC focused on California, the state that hosts the headquarters of Zuckerberg's company. This move suggests an even more sophisticated strategy: while Leading the Future operates nationally, Meta focuses on its own backyard, supporting candidates who favor "light" AI regulation across party lines.

Meta's choice to operate separately from Leading the Future may reflect strategic differences or simply a desire to keep direct control over messaging and the candidates it supports. As in a chess game where each piece has its specific role, this dual strategy maximizes the chances of success on multiple fronts.

Meta's approach is particularly interesting given the company's unique position in the AI ecosystem. Unlike pure players such as OpenAI, Meta must balance its AI ambitions against the interests of its core social media businesses, which are already under intense regulatory pressure. It is a multi-dimensional game reminiscent of the complex geopolitical balances of Game of Thrones.

The Crypto Model: Lessons from the Past

According to some analysts, the AI industry is following the playbook developed by the cryptocurrency sector, which in recent years has invested heavily in political lobbying to avoid stringent regulations. The crypto strategy has shown that targeted investments can be extremely effective in shaping the political agenda, especially when it comes to technologies that legislators struggle to fully understand.

However, AI presents unique challenges compared to cryptocurrencies. While crypto has remained largely confined to the financial and tech worlds, artificial intelligence is pervading every aspect of society, from healthcare to education, from national security to daily work. This makes the regulatory battle more complex and the consequences more profound.

The crypto sector invested over $200 million in the 2024 elections, with significant success in electing favorable candidates. The AI industry, whose backers have even deeper pockets, is applying the same lessons and has room to scale them further. It is the natural evolution of a strategy that has already proven effective, adapted to a sector with even broader and more diversified interests.

The Regulatory War: States vs. Federal

The AI battle is being fought on multiple fronts at once, creating a complexity reminiscent of Westworld's narrative structure. States are accelerating their regulatory efforts, while Republicans in Congress are pushing to block the enforcement of state AI laws, jeopardizing California's protections on artificial intelligence in healthcare, hiring, and more. Meanwhile, the federal administration seeks to balance innovation and security through executive orders and federal agency guidelines.

This federal-state tension is not new in American history, but it takes on particular contours when applied to AI. State regulations tend to be more specific and aggressive, while the federal approach favors general principles and voluntary collaboration with the industry. It is a dichotomy that could determine not only the future of American AI, but also the global competitiveness of the sector.

California's SB 243, which aims to regulate companion chatbots, including those users turn to for emotional and psychological support, represents the vanguard of this granular approach. The measure requires chatbots to clearly notify users that they are not human and prohibits engagement practices designed to foster addiction. It is a level of regulatory detail that would be unthinkable at the federal level, but that could become the template for other states.

The Stakes: Global Competition

The concerns driving Silicon Valley to this unprecedented mobilization are concrete and immediate. The stated goal is to oppose policies that "stifle innovation" and that, in the backers' view, would hand China an advantage in the global AI race. The argument resonates particularly strongly in an America increasingly concerned about technological competition with Beijing.

The "global competition" narrative is not accidental. Just as the space race of the 1960s became a symbol of American technological supremacy, today AI represents the new battlefield for economic and strategic dominance. Supporters of Leading the Future argue that excessive regulation could handicap American companies relative to their Chinese competitors, who operate in a more permissive regulatory environment.

The comparison with Europe is just as instructive. The European AI Act is the most comprehensive AI regulatory framework in the world, but American critics argue that it could stifle innovation. Studies show that the EU and the US are diverging significantly in their approach to AI governance.

Europe has chosen to focus on ethics and regulation, prioritizing "human-centric" and "trustworthy" AI models, while the United States and China are racing ahead in both civilian and military applications. This divergence is creating what some experts call a global "trilemma" of AI: security, innovation, and competitiveness seem to be increasingly difficult goals to pursue simultaneously.

Critics and Counterarguments: The Other Side of the Coin

There is no shortage of critical voices regarding this massive lobbying operation. Many tech ethics experts and Democratic policymakers see this move as an attempt at "corporate overreach" that prioritizes profits over safety. The argument is that AI, unlike previous technologies, presents existential risks that require proactive rather than reactive regulation.

Concerns range from data privacy to national security, from job displacement to the risks of algorithmic bias. As in Minority Report, where predictive technology raises complex ethical questions, modern AI presents dilemmas that go far beyond market efficiency. Critics argue that the industry's push for "light-touch" regulations deliberately ignores the systemic risks that AI can pose.

Particularly sharp are the criticisms from civil rights advocacy groups and non-profit organizations focused on ethical technology. These organizations argue that Leading the Future's bipartisan approach is actually an attempt to neutralize political opposition through co-optation rather than direct confrontation on the merits of the proposed policies.

Another controversial aspect concerns transparency. Super-PACs must disclose their donors, but Leading the Future's structure also includes a 501(c)(4) arm. These "social welfare" organizations are nonprofits exempt from federal income tax that must operate primarily to promote the common good and general welfare of the community, and they are not subject to the same donor-disclosure requirements. This raises questions about how transparent the flow of money really is, echoing the controversies surrounding the "dark money groups" that have characterized American elections in recent decades.

Perfect Timing: Anatomy of a Strategy

The choice of 2026 as the target year is not accidental. Midterm elections historically draw lower voter turnout than presidential ones, which makes targeted investments more effective in swinging outcomes. Furthermore, 2026 will likely be the year when many of the most pressing AI regulatory issues come to a head, at both the federal and state levels.

The super-PAC will use a combination of traditional donations and digital advertising campaigns to maximize the impact of its investments. In an era where public attention is fragmented across a thousand different channels, this multi-channel strategy represents the natural evolution of political advertising.

Leading the Future's strategic approach reflects a sophisticated understanding of the American political landscape. Instead of betting everything on a handful of high-profile federal races, the strategy focuses on state and local elections, where the marginal impact of advertising spending can be greater. It is the difference between blanketing viewers with television ads during the Super Bowl and investing in precisely targeted social media campaigns.

The legislative calendar also plays a crucial role. Many of the most significant AI laws are currently under discussion or implementation, and 2026 represents a critical transition point. The elections will determine not only who writes the next laws, but also who oversees the implementation and enforcement of existing ones.

The Ecosystem of Supporters: Beyond the Big Names

While the best-known names like Andreessen Horowitz and Greg Brockman capture media attention, the support ecosystem for Leading the Future is much broader and more diverse. It includes second- and third-tier venture capital firms, AI startups seeking protection from burdensome rules, and even academics and researchers concerned that excessive regulation could limit scientific research.

This diversity reflects the pervasive nature of AI in the modern economy. Unlike more concentrated sectors like energy or telecommunications, AI touches virtually every aspect of economic activity, from financial services to healthcare, from education to entertainment. This breadth of interests produces a larger but potentially more fragile coalition.

A particularly interesting aspect is the involvement of companies that are, in effect, direct competitors. The fact that Perplexity AI, whose AI-powered search competes with both Google and OpenAI's ChatGPT, sits in the same coalition as OpenAI's president, alongside venture capital firms that have invested in OpenAI's rivals, shows how existential the regulatory risk is perceived to be.

The International Dimension: Looking Beyond Borders

The American regulatory battle is not taking place in a vacuum, but is part of a global context where different models of AI governance are emerging. The comparison between the American and European approaches highlights fundamentally different philosophies: while Europe favors a precautionary, rights-based approach, the United States focuses on a model that promotes innovation and competitiveness.

This divergence has profound implications for global companies that must navigate increasingly complex regulatory frameworks. The European AI Act, with its stringent requirements for auditing and transparency, is already influencing business practices far beyond the EU's borders. It is the Brussels effect applied to artificial intelligence, where European regulations become de facto global standards.

China represents the third model, characterized by more direct state control but also greater flexibility in implementation when it comes to supporting national champions. This regulatory trilateralism is creating an increasingly fragmented landscape, where companies must develop specific compliance strategies for each market.

The geopolitical implications are clear. In a world where AI is increasingly seen as a strategic technology, national regulatory choices become instruments of soft power. The ability of the United States to maintain leadership in AI will depend not only on the quality of research and investment, but also on the wisdom of its regulatory choices.

The Impact on Startups

One of the less discussed dimensions of the regulatory battle concerns the differential impact on companies of different sizes. While giants like Google, Microsoft, and Meta have the resources to navigate complex regulatory frameworks, AI startups could find themselves significantly disadvantaged by burdensome regulations.

This creates an interesting paradox: while Leading the Future presents itself as a defender of innovation in general, it may end up primarily protecting the large players who have the resources to fund the operation. It is a dynamic reminiscent of the regulation of the pharmaceutical sector, where compliance costs have gradually raised barriers to entry, consolidating the power of large corporations.

On the other hand, many startups see predictable and clear regulations as a competitive advantage. Instead of the current regulatory jungle, where each state could develop different rules, a coherent federal framework could reduce compliance costs and create a more level playing field.

The tension is palpable in interviews with AI startup founders: many support the idea of sensible regulations but fear that the political process could produce rules written by and for the industry giants. It is a legitimate concern in a sector where access to capital and legal expertise can determine a company's survival.

Towards the Future: Scenarios and Implications

Looking to 2026 and beyond, several possible scenarios emerge for the evolution of American AI regulation. In the best-case scenario for the industry, Leading the Future and similar initiatives succeed in electing a critical mass of favorable legislators, creating a stable regulatory environment that fosters innovation without excessively compromising security.

The worst-case scenario would see even greater fragmentation, with states developing incompatible regulations and a federal government paralyzed by political opposition. This would create not only uncertainty for companies, but also opportunities for international competitors to exploit the American confusion.

The most likely scenario is a middle ground: a mosaic of regulations that reflects American political complexity, with some states adopting more restrictive approaches and others more permissive ones, while a lowest-common-denominator framework gradually emerges at the federal level.

Regardless of the specific outcome, the massive investment by Leading the Future marks a turning point in the political maturity of the AI industry. It is no longer about technologists trying to avoid politics, but about a sector that has learned to play the power game by Washington's rules.

Conclusions: The Future is Now

As in any good science fiction saga, the real conflict is not about the technology itself, but about who controls that technology. The battle that is shaping up for 2026 will determine not only the future of American AI, but potentially the role of the United States in the 21st-century global economy.

Leading the Future and parallel initiatives like Meta's super-PAC represent a bold experiment in American politics: apart from the crypto industry's recent campaign, few industrial sectors have invested such large sums with such specific objectives. The success or failure of this strategy could redefine the relationship between technology and politics for generations to come.

The $100 million is just the appetizer for what could become a much larger investment if the strategy proves effective. Other tech sectors are already watching Leading the Future's approach with interest, evaluating whether to replicate it for their own regulatory battles.

But there is a deeper question that this mobilization raises: in a democracy, what is the appropriate relationship between economic power and political influence? Silicon Valley, with its unprecedented concentration of wealth and technological talent, is redefining the traditional boundaries of this balance.

Ultimately, while the $100 million makes headlines, the real stakes are much higher: control of the narrative around artificial intelligence and, consequently, the power to shape a future where AI will no longer be science fiction, but a daily reality for billions of people. Whether this future is characterized by free innovation or democratic control will depend, in large part, on what happens in the 2026 elections. And Silicon Valley has decided it will leave nothing to chance.